Recurrent Neural Networks (RNNs) are a class of artificial neural networks designed for sequential data, where the order of the data points matters. They are well suited to tasks such as natural language processing (NLP), time series prediction, and speech recognition. This article explains how RNNs work, their advantages and disadvantages, their main use cases, and their role as a stepping stone toward later AI architectures.

Detailed Explanation of RNN:

1. Architecture:

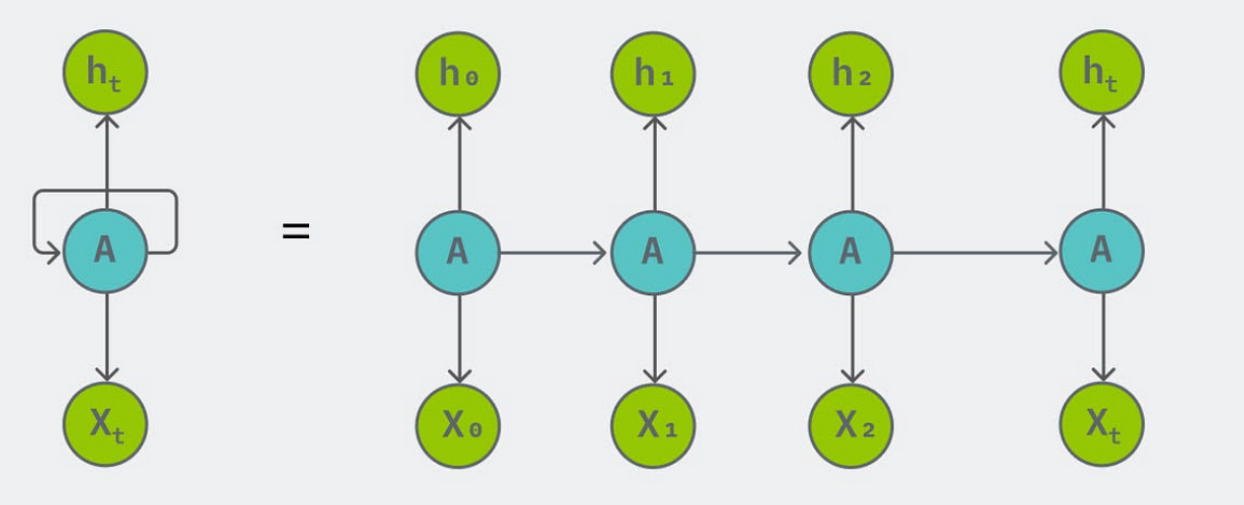

- RNNs are characterized by their recurrent connections, where the output of a neuron is fed back into the network as input for the next time step.

- This recurrent nature allows RNNs to exhibit dynamic temporal behavior, making them well-suited for tasks where context or history is crucial.

Image showing how output of a neuron is fed into the network

2. Structure:

- At each time step \( t \), an RNN receives input \( x_t \) and produces output \( y_t \).

- RNNs maintain a hidden state \( h_t \), which serves as memory that captures information from previous time steps.

- The hidden state \( h_t \) is updated based on the current input \( x_t \) and the previous hidden state \( h_{t-1} \), along with learnable parameters.
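The update described above can be sketched in a few lines of NumPy. This is a minimal illustration, not a reference implementation: the `tanh` activation, the linear output layer, the weight names (`W_xh`, `W_hh`, `W_hy`, `b_h`, `b_y`), and the layer sizes are all assumptions chosen for the example.

```python
import numpy as np

def rnn_step(x_t, h_prev, W_xh, W_hh, b_h, W_hy, b_y):
    """One time step of a vanilla RNN (tanh activation is an assumption)."""
    # New hidden state from the current input and the previous hidden state.
    h_t = np.tanh(W_xh @ x_t + W_hh @ h_prev + b_h)
    # Output read from the hidden state (a plain linear layer, also an assumption).
    y_t = W_hy @ h_t + b_y
    return h_t, y_t

def rnn_forward(xs, h0, params):
    """Apply rnn_step to every row of xs, carrying the hidden state forward."""
    h = h0
    hs, ys = [], []
    for x_t in xs:
        h, y = rnn_step(x_t, h, *params)
        hs.append(h)
        ys.append(y)
    return np.stack(hs), np.stack(ys)

# Illustrative sizes and random weights, not taken from the article.
rng = np.random.default_rng(0)
input_size, hidden_size, output_size, seq_len = 3, 4, 2, 5
params = (
    rng.normal(scale=0.1, size=(hidden_size, input_size)),   # W_xh
    rng.normal(scale=0.1, size=(hidden_size, hidden_size)),  # W_hh
    np.zeros(hidden_size),                                   # b_h
    rng.normal(scale=0.1, size=(output_size, hidden_size)),  # W_hy
    np.zeros(output_size),                                   # b_y
)
xs = rng.normal(size=(seq_len, input_size))
hs, ys = rnn_forward(xs, np.zeros(hidden_size), params)
print(hs.shape, ys.shape)  # (5, 4) (5, 2)
```

Note that the same five parameter arrays are reused at every time step; only the hidden state changes as the sequence is consumed.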

3. Training:

- RNNs are typically trained using backpropagation through time (BPTT), an extension of backpropagation for sequential data.

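- BPTT unrolls the network through time, treating each time step as a separate layer that shares the same weights, and applies standard backpropagation to the unrolled network. The gradient for each shared weight is the sum of its contributions from all time steps.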
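To make the unrolling concrete, here is a small sketch of BPTT for a scalar RNN, using only the standard library. Everything specific here is an assumption made for illustration: a single hidden unit with `tanh`, a squared-error loss on the final hidden state only, and the sample inputs and weights. The result is compared against a finite-difference estimate as a sanity check.

```python
import math

def forward(xs, w_x, w_h, b, h0=0.0):
    """Run a scalar tanh RNN and return every hidden state, with hs[0] = h0."""
    hs = [h0]
    for x in xs:
        hs.append(math.tanh(w_x * x + w_h * hs[-1] + b))
    return hs

def loss(xs, target, w_x, w_h, b):
    """Squared error between the final hidden state and a target value."""
    return 0.5 * (forward(xs, w_x, w_h, b)[-1] - target) ** 2

def bptt(xs, target, w_x, w_h, b):
    """Gradients of the loss w.r.t. (w_x, w_h, b) via backpropagation through time."""
    hs = forward(xs, w_x, w_h, b)
    g_wx = g_wh = g_b = 0.0
    dh = hs[-1] - target               # dL/dh_T
    for t in range(len(xs), 0, -1):    # walk the unrolled "layers" backwards
        da = dh * (1.0 - hs[t] ** 2)   # through tanh: d tanh(a)/da = 1 - tanh(a)^2
        g_wx += da * xs[t - 1]         # shared weights: contributions add up over time
        g_wh += da * hs[t - 1]
        g_b += da
        dh = da * w_h                  # gradient passed back to h_{t-1}
    return g_wx, g_wh, g_b

def numeric_grad(xs, target, params, i, eps=1e-6):
    """Central finite-difference estimate of dL/d params[i]."""
    up, down = list(params), list(params)
    up[i] += eps
    down[i] -= eps
    return (loss(xs, target, *up) - loss(xs, target, *down)) / (2 * eps)

# Illustrative data and weights, not taken from the article.
xs, target = [0.5, -1.0, 0.3, 0.8], 0.2
params = (0.7, -0.4, 0.1)  # (w_x, w_h, b)
analytic = bptt(xs, target, *params)
numeric = tuple(numeric_grad(xs, target, params, i) for i in range(3))
print("BPTT:   ", analytic)
print("numeric:", numeric)
```

The two printed tuples should agree to several decimal places. In practice a deep learning framework's automatic differentiation performs this computation; the hand-written loop is only meant to show where the "one layer per time step" view comes from.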

Advantages of RNN:

1. Sequential Modeling:

- RNNs excel at modeling sequential data because they can capture temporal dependencies.

- They can process input sequences of varying lengths, because the same weights are applied at every time step.
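As a brief illustration of the variable-length point, the sketch below runs one set of weights over a short and a long sequence. The `tanh` cell, the helper name `final_hidden_state`, and all sizes are assumptions made for the example.

```python
import numpy as np

def final_hidden_state(xs, W_xh, W_hh, b_h):
    """Consume a sequence of any length and return the last hidden state."""
    h = np.zeros(W_hh.shape[0])
    for x_t in xs:  # the loop length adapts to the sequence; the weights do not change
        h = np.tanh(W_xh @ x_t + W_hh @ h + b_h)
    return h

# Illustrative sizes and random weights.
rng = np.random.default_rng(0)
input_size, hidden_size = 3, 4
W_xh = rng.normal(scale=0.1, size=(hidden_size, input_size))
W_hh = rng.normal(scale=0.1, size=(hidden_size, hidden_size))
b_h = np.zeros(hidden_size)

short_seq = rng.normal(size=(2, input_size))  # 2 time steps
long_seq = rng.normal(size=(9, input_size))   # 9 time steps
h_short = final_hidden_state(short_seq, W_xh, W_hh, b_h)
h_long = final_hidden_state(long_seq, W_xh, W_hh, b_h)
print(h_short.shape, h_long.shape)  # (4,) (4,)
```

Both sequences are summarized into a hidden state of the same size, which is what lets a downstream classifier or regressor accept inputs of different lengths.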

2. Flexibility:

- RNNs can handle inputs and outputs of different types (e.g., text, audio, time series) and perform tasks such as sequence generation, classification, and regression.

3. Memory:

- The recurrent connections enable RNNs to maintain a memory of previous inputs, allowing them to learn and infer patterns across time.

Disadvantages of RNN:

1. Vanishing/Exploding Gradient:

- RNNs are susceptible to the vanishing or exploding gradient problem, where gradients shrink toward zero or grow without bound as they are propagated backward through many time steps.

- Vanishing gradients make it difficult to learn long-term dependencies, while exploding gradients make training unstable.
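The effect is easiest to see in a deliberately simplified setting. The sketch below assumes a scalar, purely linear recurrence \( h_t = w_h h_{t-1} + x_t \) (no activation function, which is a simplification and not how RNNs are usually built), for which the gradient of \( h_T \) with respect to \( h_0 \) is \( w_h^T \). The function name and the chosen weights are illustrative.

```python
def gradient_through_time(w_h, steps):
    """d h_T / d h_0 for the linear recurrence h_t = w_h * h_{t-1} + x_t."""
    grad = 1.0
    for _ in range(steps):
        grad *= w_h  # each step backward multiplies by the same recurrent weight
    return grad

# Illustrative recurrent weights: below, at, and above 1 in magnitude.
for w_h in (0.5, 1.0, 1.5):
    print(w_h, gradient_through_time(w_h, steps=50))
```

After 50 steps the gradient for a weight of 0.5 is vanishingly small, while the gradient for 1.5 is enormous; only a weight of exactly 1.0 leaves it unchanged. With a `tanh` activation each factor is additionally multiplied by a derivative that is at most 1, which pushes the product further toward zero.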

2. Short-Term Memory:

- Traditional RNNs struggle to retain information over many time steps, so inputs seen early in a long sequence tend to have little influence on later outputs. This limits their ability to capture long-range dependencies.

Use Cases of RNN:

1. Natural Language Processing (NLP):

- RNNs are widely used for tasks like language modeling, machine translation, sentiment analysis, and named entity recognition.

2. Time Series Prediction:

- RNNs can forecast future values in time series data, making them valuable for applications like stock market prediction, weather forecasting, and demand forecasting.

3. Speech Recognition:

- RNNs can process sequential audio data for tasks such as speech recognition, speech synthesis, and speaker identification.

RNN as a Stepping Stone for AI:

1. Historical Significance:

- Plain RNNs paved the way for gated recurrent architectures, such as Long Short-Term Memory (LSTM) networks and Gated Recurrent Units (GRUs), which mitigate the vanishing gradient problem and improve the network's ability to retain information over long sequences.

2. Incremental Improvement:

- While RNNs have limitations, their development and exploration have contributed to the evolution of neural network architectures, leading to more powerful models capable of handling complex tasks.

3. Foundation for Deep Learning:

- RNNs, along with other neural network architectures, form the foundation of deep learning, providing the groundwork for the development of sophisticated AI systems capable of understanding and generating sequential data.

In summary, RNNs are a fundamental class of neural networks known for their ability to model sequential data. Despite their limitations, they are widely applied across many domains and have played a significant role in advancing the field of AI, serving as a stepping stone toward more advanced architectures and algorithms.