Navigating the Road to Artificial General Intelligence: A Step-by-Step Journey

In Artificial Intelligence (AI), the concept of Artificial General Intelligence (AGI) stands as the ultimate frontier: a machine able to understand, learn, and apply knowledge across diverse domains with human-like proficiency. Yet the path to AGI is not a single leap; it is a journey of incremental advancements and architectural innovations. In this exploration, we look at the architectural milestones, the pros and cons of a step-by-step approach, and how these milestones might converge to pave the way for AGI.

Foundational Blocks: Building the Bedrock

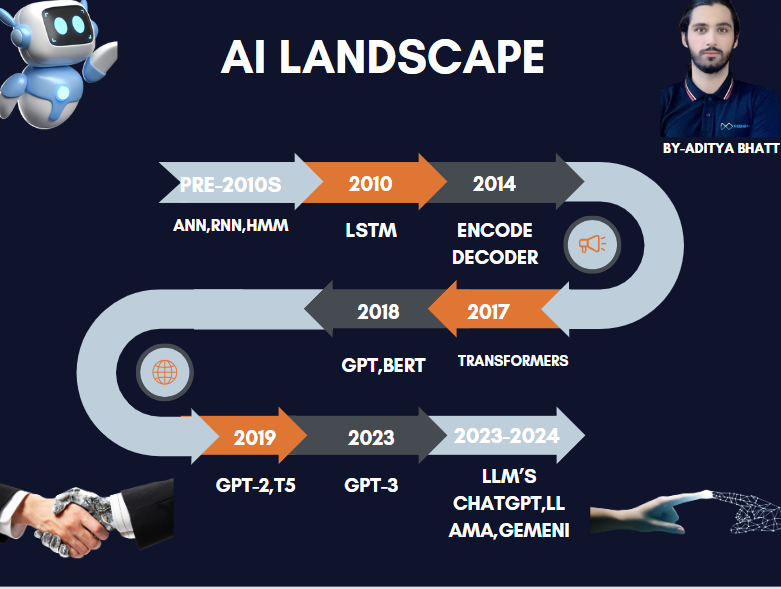

Our journey begins with the foundational blocks laid in the early days of AI research. Symbolic AI and Expert Systems (1950s-1980s) set the stage, employing rule-based systems to represent structured knowledge. However, these systems struggled with uncertainty and could not adapt beyond their hand-written rules. The torch was then passed to Statistical Approaches (1990s), which brought probabilistic models like Hidden Markov Models (HMMs), along with renewed interest in neural networks, that could learn from data rather than rely on fixed rules. The era of Deep Learning (groundwork in the 2000s, breakthroughs in the 2010s) followed, with Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs) enabling the processing of unstructured data like images, audio, and text.

Sequence Modeling and Language Understanding: A Shift in Paradigm

As our understanding of AI deepened, so did our approach to sequence modeling and language understanding. Long Short-Term Memory (LSTM) networks (introduced in 1997 and widely adopted in the 2010s) and Encoder-Decoder Architectures (2010s) tackled the challenge of capturing temporal dependencies, laying the groundwork for tasks like machine translation. The introduction of the Transformer Architecture (2017) marked a paradigm shift: instead of passing information step by step through a sequence, attention mechanisms let every position look directly at every other position, which captures long-range dependencies more effectively. This architectural leap drove rapid advances in language translation and text generation.
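To make the attention idea concrete, here is a minimal sketch of scaled dot-product attention, the core operation of the Transformer, written in plain Python. The function names and the toy vectors are illustrative assumptions, not taken from any particular library; real implementations add learned projections, multiple heads, and batched matrix operations.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(queries, keys, values):
    """Compute softmax(Q K^T / sqrt(d_k)) V for lists of vectors."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
            for k in keys
        ]
        weights = softmax(scores)
        # Output is the weighted average of the value vectors.
        out = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


# Toy "sequence" of four token vectors (made-up numbers for illustration).
tokens = [[1.0, 0.0], [0.0, 1.0], [0.0, 1.0], [1.0, 0.0]]

# Self-attention: queries, keys, and values all come from the same tokens.
outputs, weights = scaled_dot_product_attention(tokens, tokens, tokens)
print(weights[0])
```

In this toy example, token 0 gives token 3 the same weight it gives itself, even though they sit three positions apart: the score depends only on how similar the vectors are, not on their distance in the sequence. That is the property that helps attention handle long-range dependencies.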

Harnessing Pre-trained Models and Transfer Learning

The advent of pre-trained models and transfer learning reshaped the landscape of AI: a model is first trained on a large general corpus, and that knowledge is then reused for specific tasks. Generative Pre-trained Transformers (GPT) (2018) and Bidirectional Encoder Representations from Transformers (BERT) (2018) emerged as pioneers, using large-scale transformer architectures pre-trained on extensive corpora of text data. Models like GPT-3 (2020), with 175 billion parameters, went further, performing well across a spectrum of natural language processing tasks, often from only a few examples given in the prompt.
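To give a sense of what 175 billion parameters means in practice, the back-of-the-envelope sketch below estimates the memory needed just to store the weights. It assumes 2 bytes per parameter (16-bit floats) or 4 bytes (32-bit floats); the helper name is hypothetical, and the estimate ignores activations, optimizer state, and everything else needed for training or serving.

```python
def weights_memory_gb(num_parameters, bytes_per_parameter):
    """Rough storage size of the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_parameters * bytes_per_parameter / 1e9


GPT3_PARAMETERS = 175_000_000_000  # figure quoted in the text above

print(weights_memory_gb(GPT3_PARAMETERS, 2))  # 16-bit weights -> 350.0
print(weights_memory_gb(GPT3_PARAMETERS, 4))  # 32-bit weights -> 700.0
```

Even under these simplifying assumptions, the weights alone are far too large for a single consumer GPU, which is one reason models of this size are trained and served across many accelerators.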

Conversational AI: The Human Touch

Conversations, the cornerstone of human interaction, posed a unique challenge for AI. Early attempts with rule-based systems and retrieval methods paved the way for Chatbots and Dialogue Systems (2010s). However, it was with the advent of ChatGPT (2022) that conversational AI took a significant leap forward. Built on a large language model and fine-tuned for dialogue with techniques such as reinforcement learning from human feedback, ChatGPT combines advancements in architecture, training techniques, and fine-tuning strategies to improve its conversational abilities and contextual understanding.

Pros and Cons: The Balancing Act

The step-by-step approach to AGI presents a blend of opportunities and challenges. Incremental advancements foster steady progress, offering insights into the inner workings of intelligence. Modularity allows for isolated improvements, while versatility means that techniques find applications beyond their initial domains. However, as models grow more complex they become harder to interpret and to scale. Resource-intensive development and ethical dilemmas add further difficulty, calling for a balance between progress and responsibility.

Learning AI: A Path Forward

For those embarking on the journey of AI exploration, resources like the YouTube series linked here offer a roadmap to understanding AI architectures and advancements. Through hands-on experimentation and theoretical exploration, aspiring enthusiasts can gain invaluable insights and skills in AI development, contributing to the collective pursuit of AGI.

In conclusion, the road to Artificial General Intelligence is not a sprint but a marathon: a journey of continuous learning, experimentation, and collaboration. By embracing a step-by-step approach and navigating the complexities with diligence and foresight, we inch closer to the realization of AGI, shaping a future where intelligence transcends boundaries and empowers humanity.