Unleashing the Power of Retrieval-Augmented Generation (RAG): Revolutionizing Language Models with Real-World Knowledge

Large language models are trained on a fixed snapshot of data, so their knowledge stops at the training cutoff and excludes anything private to your organization. Retrieval-Augmented Generation (RAG) addresses this by retrieving relevant passages from external knowledge sources at query time and supplying them to the model as part of the prompt. Join us as we look at the problems RAG solves, the advantages it brings to language models, and the workflow behind it.

Solving the Limitations of Traditional Language Models

A language model used on its own is constrained by static knowledge and factual inconsistency. Everything it knows comes from its training data, so it cannot account for events or documents that appeared later, and it may produce fluent but incorrect answers when asked about them. The result is unreliable information and diminished trust in AI-driven solutions. RAG bridges the gap between static training data and current information: by retrieving from external knowledge sources at query time, it helps keep responses relevant and up to date without retraining the model.

Harnessing the Power of Your Data

One of the most compelling advantages of RAG is that it lets a language model draw on your organization's own data. Relevant information from your data repositories is inserted into the prompt context, so the model can generate responses that are grounded in that data and tailored to the specific needs of your organization. This applies equally to customer support queries, internal knowledge sharing, and domain-specific applications.
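To make "incorporating information into the prompt context" concrete, here is a minimal sketch in Python. The function name `build_augmented_prompt`, the instruction wording, and the sample passage are all assumptions made for illustration; a real application would tune the template to its model and use case.

```python
def build_augmented_prompt(question: str, passages: list[str]) -> str:
    """Place retrieved passages ahead of the question so the model can ground its answer.

    Hypothetical helper: the template below is one plausible layout, not a standard.
    """
    # Number each passage so the model (and a human reviewer) can refer to it.
    numbered = "\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    return (
        "Answer the question using only the context below. "
        "If the context is insufficient, say so.\n\n"
        f"Context:\n{numbered}\n\n"
        f"Question: {question}\nAnswer:"
    )


# Example: one passage pulled from an (imaginary) support knowledge base.
prompt = build_augmented_prompt(
    "How long do refunds take?",
    ["Refunds are issued within 14 days of a return being received."],
)
print(prompt)
```

The resulting string is what gets sent to the language model in place of the bare question. The passages themselves come from the retrieval step of the workflow.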

Improving LLMs with Real-World Knowledge

Enriching language model prompts with retrieved, real-world knowledge makes responses more accurate, relevant, and actionable, because the model can work from the supplied text rather than relying only on what it absorbed during training. Typical uses include providing domain-specific answers in customer support chatbots, augmenting search results with real-time information, and giving employees instant access to internal knowledge repositories.

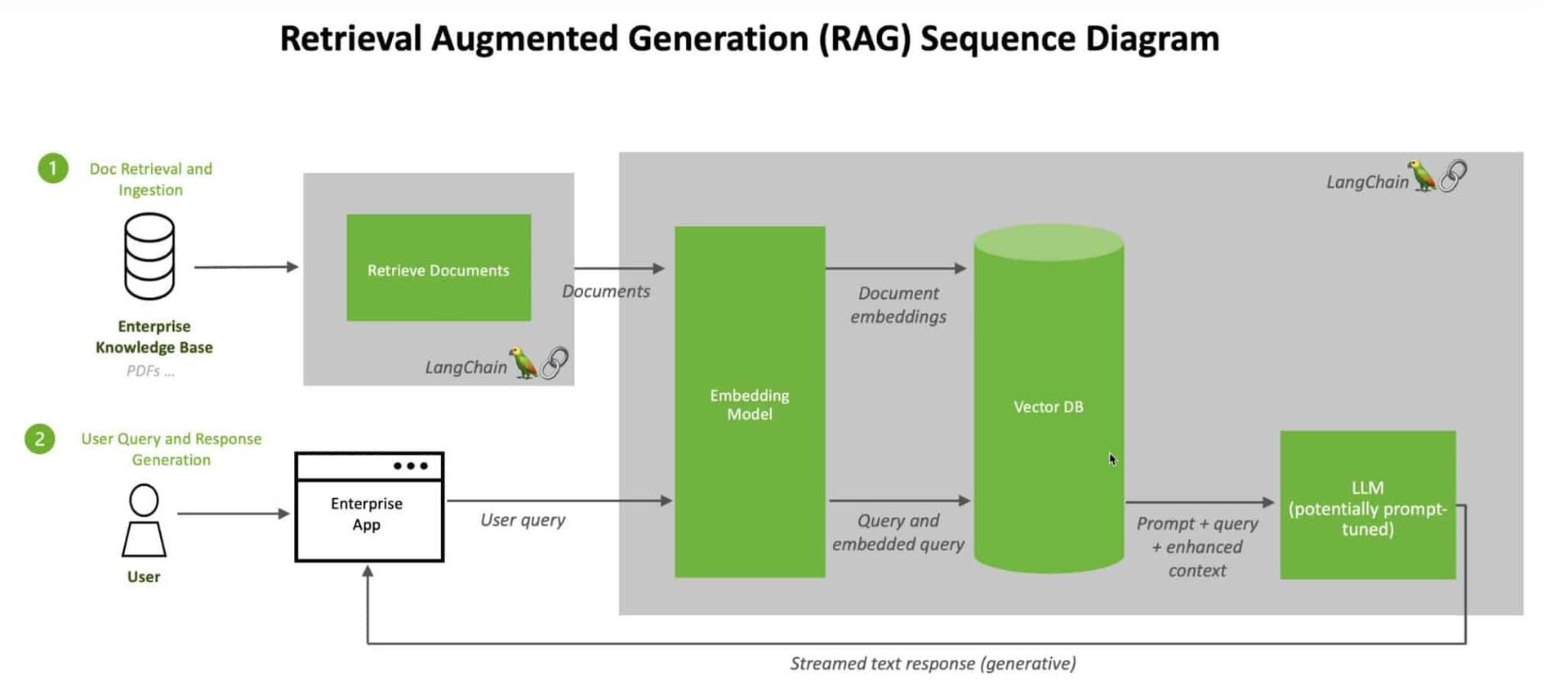

Navigating the RAG Workflow: From Data Preparation to Deployment

The RAG workflow has four stages. It begins with data preparation, where document data is gathered, preprocessed, and chunked into lengths that suit the embedding model and the language model's context window. Next, the relevant data is indexed: an embedding is computed for each chunk and used to populate a Vector Search index. At query time, the chunks most relevant to the user's query are retrieved from the index and provided as part of the prompt for the language model. Finally, the LLM application is built by wrapping prompt augmentation and the call to the language model into an endpoint, which can be exposed to applications such as Q&A chatbots via a simple REST API.
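The stages above can be sketched end to end with the Python standard library alone. This is a toy under stated assumptions, not a production design: `chunk_text`, `embed`, `ToyVectorIndex`, and `answer` are hypothetical names, the "embedding" is a simple bag-of-words count standing in for a learned embedding model, the index is an in-memory list standing in for a real vector search service, and `llm` is any callable that maps a prompt string to a reply.

```python
import math
import re
from collections import Counter


def chunk_text(text: str, max_words: int = 40, overlap: int = 10) -> list[str]:
    """Data preparation: split text into overlapping windows of words."""
    if not 0 <= overlap < max_words:
        raise ValueError("overlap must be non-negative and smaller than max_words")
    words = text.split()
    chunks, start = [], 0
    while start < len(words):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # the last window already reaches the end of the text
        start += max_words - overlap
    return chunks


def embed(text: str) -> Counter:
    """Toy embedding (assumption): token counts instead of a learned vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class ToyVectorIndex:
    """Indexing and retrieval: an in-memory stand-in for a vector search index."""

    def __init__(self) -> None:
        self._entries: list[tuple[str, Counter]] = []

    def add(self, chunk: str) -> None:
        self._entries.append((chunk, embed(chunk)))

    def search(self, query: str, k: int = 2) -> list[str]:
        q = embed(query)
        ranked = sorted(self._entries, key=lambda e: cosine(q, e[1]), reverse=True)
        return [chunk for chunk, _ in ranked[:k]]


def answer(question: str, index: ToyVectorIndex, llm, k: int = 2) -> str:
    """Application step: retrieve, augment the prompt, then call the model."""
    context = "\n".join(index.search(question, k=k))
    prompt = f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"
    return llm(prompt)


# Sample documents invented for this sketch.
documents = [
    "Refunds are issued within 14 days of a return being received.",
    "Standard shipping takes three to five business days.",
    "Support is available by email on weekdays.",
]

index = ToyVectorIndex()
for doc in documents:
    for chunk in chunk_text(doc):
        index.add(chunk)

top = index.search("How long do refunds take?", k=1)
print(top)
```

In a deployed system, `answer` is the function a REST handler would call, with `llm` bound to a real model client. Swapping the toy `embed` for a learned embedding model is what lets retrieval match on meaning rather than on shared words.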

Unlocking the Future of AI with RAG

In conclusion, RAG enhances language models by grounding their responses in external knowledge sources retrieved at query time. That lets organizations apply these models to their own, constantly changing data, unlock new insights, and navigate a shifting landscape of information with more confidence and clarity. As the tooling around data preparation, indexing, and retrieval matures, RAG offers a practical path to AI applications that are both more capable and more trustworthy.